Economic Risk Simulation Engine for Compound v3

First and foremost, I’d like to thank @allthecolors and the CGP team for their valuable contributions and data inputs for this proposal.

Note that this proposal has been accepted and can be viewed at Questbook.

Application Criteria under Project Ideas in RFP

- Open-source risk frameworks and associated analysis

- Governance research, analysis, and integrations in the context of Compound’s deployment of Comet contracts on mainnet and other chains

Summary

Chainrisk proposes to onboard the Compound community onto its risk and simulation platform to test Compound V3 (Comet) modules (Interest Rates, Collateral and Borrowing, and Liquidations) and new protocol upgrades under various market scenarios. The platform will support the community in stress-testing the protocol's mechanism design and in bespoke protocol research, with publicly available analysis and results.

Company Background

Chainrisk specializes in economic security, offering a unified simulation platform designed for teams to efficiently test protocols, particularly in challenging market conditions. Our technology is anchored by a cloud-based simulation engine driven by agents and scenarios, enabling users to create tailored market situations for comprehensive risk assessment.

Our team comprises experts with diverse backgrounds in Crypto, Security, Data Science, Economics, and Statistics, bringing valuable experience from institutions such as Ethereum Foundation, NASA, JP Morgan, Deutsche Bank, Polygon, Nethermind, and EigenLayer.

The Chainrisk Cloud simulation platform mirrors the mainnet environment as closely as possible. Each simulation runs on a fork from a specified block height, ensuring up-to-date account balances and the latest smart contracts and code deployed across DeFi. This holistic approach is crucial for understanding how external factors such as cascading liquidations, oracle failure, gas fees, and liquidity crises can impact a protocol in various scenarios.

With grant funding, our focus is onboarding Compound onto the Chainrisk Cloud simulation engine. Additionally, we aim to develop and deploy multiple statistical features in subsequent milestones. Our goal is to analyze and verify that Compound modules can withstand high market stress.

The Proposal

To enhance risk coverage of the Compound protocol and transparency to the community, we propose tooling that covers a few major areas:

- Risk parameters for all Compound V3 markets (Borrow Collateral Factor, Liquidation Collateral Factor, Liquidation Factor, Collateral Safety Grade, Supply Cap, Target Reserves, Store Front Price Factor, Liquidator Points, Interest Rate Curves) across Ethereum, Polygon, Base and Arbitrum.

- Coverage of the base asset (USDC) and all collateral assets (WBTC, WETH, LINK, UNI, COMP).

- Parameter optimization using scaled Monte Carlo simulations (all within a SaaS).

- An end-to-end economic audit report.
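To make a few of the risk parameters listed above concrete, here is a minimal Python sketch of how they interact in a single Comet market. It is modelled on the Comet contract's borrow/liquidation checks and its storefront discount (discount = storeFrontPriceFactor × (1 − liquidationFactor)). It uses plain floats instead of Comet's 1e18-scaled integers. All helper names and the account, price and factor values are hypothetical illustrations, not live Compound parameters. Supply caps, reserves, Collateral Safety Grade and Liquidator Points are not modelled.

```python
from dataclasses import dataclass

@dataclass
class CollateralAsset:
    # Hypothetical parameters as plain fractions (Comet itself uses 1e18-scaled integers)
    price: float                        # oracle price in base-asset units
    borrow_collateral_factor: float     # weight used when opening a borrow
    liquidate_collateral_factor: float  # weight used for the liquidation check
    liquidation_factor: float           # share of collateral value credited on absorb

def borrow_capacity(balances, assets):
    """Maximum debt an account can open: collateral value weighted by borrow CF."""
    return sum(amount * assets[sym].price * assets[sym].borrow_collateral_factor
               for sym, amount in balances.items())

def is_liquidatable(debt, balances, assets):
    """Liquidatable once debt exceeds collateral value weighted by liquidate CF."""
    liquidity = sum(amount * assets[sym].price * assets[sym].liquidate_collateral_factor
                    for sym, amount in balances.items())
    return debt > liquidity

def store_front_price(asset, store_front_price_factor):
    """Discounted price for buying absorbed collateral from the protocol:
    discount = storeFrontPriceFactor * (1 - liquidationFactor)."""
    discount = store_front_price_factor * (1 - asset.liquidation_factor)
    return asset.price * (1 - discount)

# Illustrative values only, not live Compound parameters
weth = CollateralAsset(price=2000.0, borrow_collateral_factor=0.80,
                       liquidate_collateral_factor=0.85, liquidation_factor=0.95)
assets = {"WETH": weth}
position = {"WETH": 1.0}

print(borrow_capacity(position, assets))          # about 1600 base units
print(is_liquidatable(1650.0, position, assets))  # False: threshold is about 1700
weth.price = 1900.0                               # 5% price drop
print(is_liquidatable(1650.0, position, assets))  # True: threshold falls to about 1615
print(store_front_price(weth, 0.5))               # 1900 * (1 - 0.5 * 0.05), about 1852.5
```

The gap between the borrow and liquidation collateral factors is the buffer a position has before it can be absorbed. That buffer is exactly what the stress tests described below probe.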

Economic risk management today is highly boutique and black-boxed. We have designed a scalable risk management SaaS solution based on the ethos of verifiable simulations and proven statistical models.

The On-Chain Simulation Engine & Economic Security Index

The sole aim of economic security is to make the cost of corruption exceed the profit from corruption.

From a security and infrastructure perspective, we recognize the need for additional tools to be built and maintained to bolster Compound’s security posture on top of the protections offered by teams like Gauntlet, OpenZeppelin, Trail of Bits, and Certora.

Chainrisk delivers a groundbreaking, cloud-based simulation platform built on the philosophy that the most valuable testing environment closely resembles a real-world production environment.

On-chain Simulations

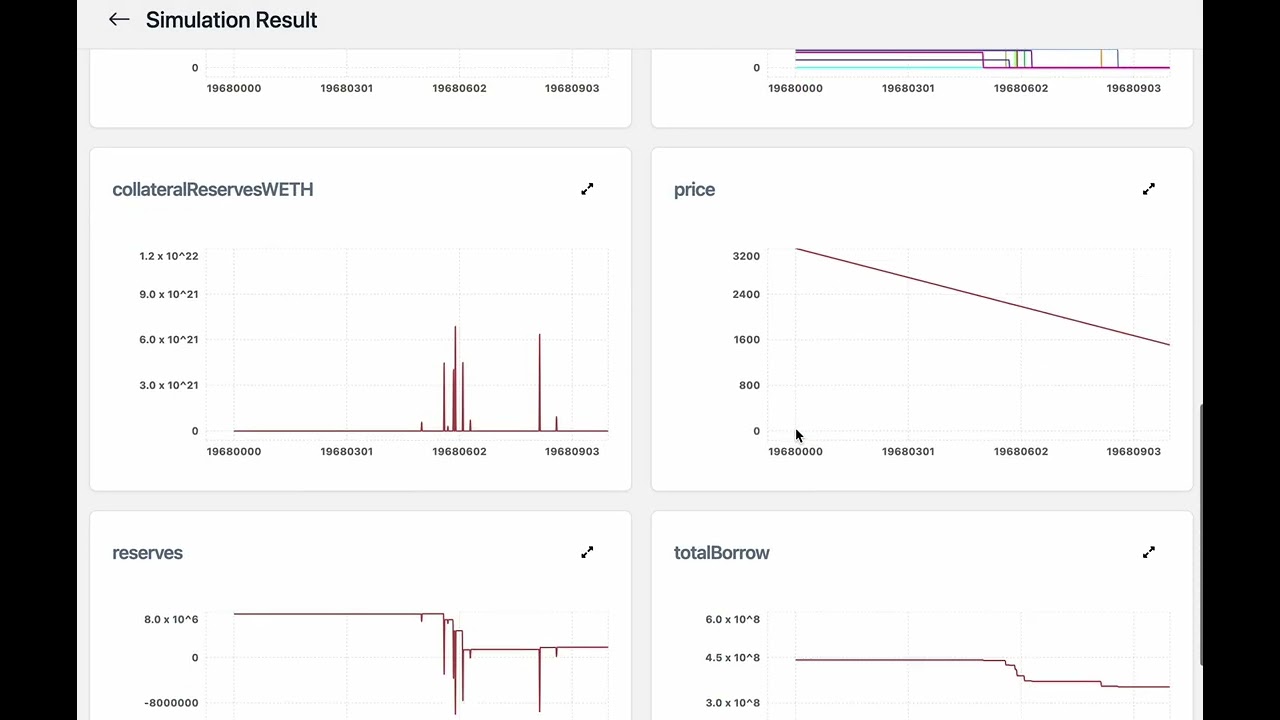

On-chain simulations create a fork of the blockchain from a designated block height and deploy a catalog of agents, scenarios, and observations within the Chainrisk Cloud environment. During on-chain simulations, Chainrisk Cloud executes a massive number of Monte Carlo simulations to evaluate the protocol’s Value at Risk (VaR) per market (chain) and across markets.
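As a rough illustration of what a Monte Carlo VaR estimate for one market involves, the sketch below makes several simplifying assumptions. A single collateral asset follows zero-drift geometric Brownian motion. Positions are hypothetical (collateral, debt) pairs. Loss is defined as debt left uncovered by collateral at the horizon, ignoring liquidations along the path. This is not Chainrisk's engine, which replays agents on a forked chain. It only shows the statistical step of taking a loss quantile over many simulated paths. Cross-market VaR would aggregate losses per path across markets before taking the quantile.

```python
import math
import random

def terminal_price(p0, daily_vol, horizon_days, rng):
    """One zero-drift geometric Brownian motion draw of the price after horizon_days."""
    z = rng.gauss(0.0, 1.0)
    return p0 * math.exp(-0.5 * daily_vol**2 * horizon_days
                         + daily_vol * math.sqrt(horizon_days) * z)

def bad_debt(positions, price):
    """Loss = debt left uncovered by collateral value at `price` (no mid-path liquidations)."""
    return sum(max(0.0, debt - collateral * price) for collateral, debt in positions)

def monte_carlo_var(positions, p0, daily_vol, horizon_days,
                    n_paths=10_000, level=0.99, seed=0):
    """Empirical VaR: the `level` quantile of simulated losses over the horizon."""
    rng = random.Random(seed)
    losses = sorted(bad_debt(positions, terminal_price(p0, daily_vol, horizon_days, rng))
                    for _ in range(n_paths))
    return losses[min(n_paths - 1, int(level * n_paths))]

# Hypothetical market: (collateral units, debt in base units) per account
positions = [(10.0, 15_000.0), (5.0, 9_000.0), (2.0, 3_500.0)]
print(monte_carlo_var(positions, p0=2_000.0, daily_vol=0.05, horizon_days=7))
```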

Agents represent user behavior, allowing for the emulation of diverse protocol user actions. The Chainrisk Scenario Catalog empowers control over macro variables and conditions such as gas prices, DEX and protocol liquidity, oracle return values, significant market events like Black Thursday, and more. Observers facilitate in-depth protocol analysis and yield more insightful simulations.

Through this robust software, users can control and test a host of different factors that can impact protocol security and user funds, including:

- Oracle data (e.g., asset prices, interest rates): This allows users to simulate how external data feeds can influence the protocol’s behavior.

- Transaction fees (gas prices): Users can test how varying gas costs impact user behavior and protocol functionality.

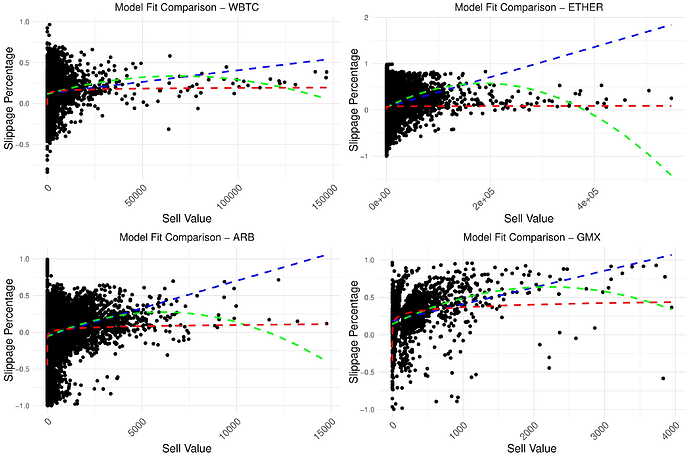

- Liquidation thresholds (price at which collateral is sold): Simulations can assess the protocol’s vulnerability to cascading liquidations triggered by price drops.

- Flash loan availability: The platform allows testing the impact of flash loans, a type of uncollateralized loan that can be used for arbitrage or manipulation.

Economic security testing and simulations via the Chainrisk Cloud platform allow you to test your protocol in different scenarios and custom environments to understand where your risks lie before a malicious actor can exploit them. Some examples:

- Simulating Market Swings: Test how your protocol’s reserves handle periods of high volatility.

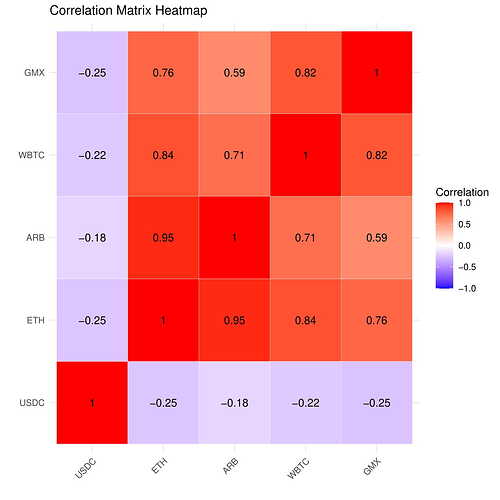

- Uncovering Asset Dependencies: Analyze how correlated assets impact liquidations.

- Stress Testing Price Crashes: Gauge the system’s response to dramatic price drops in terms of liquidations and overall liquidity.

- Evaluating Gas Fee Impact: Assess how high gas fees affect the efficiency of the liquidation process.

- Projecting New Asset Integration: Simulate the impact of introducing new borrowable assets on demand, revenue, and liquidations.
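The price-crash and gas-fee examples above can be combined in a toy sweep. The sweep shocks the collateral price by several percentages, counts which hypothetical accounts become liquidatable, and checks whether liquidating each one still pays once gas is spent. Several pieces are simplifying assumptions made for illustration: the flat liquidator reward (`liquidator_discount`), the gas cost in base units, and all account data.

```python
from dataclasses import dataclass

@dataclass
class Account:
    collateral: float  # units of the collateral asset
    debt: float        # debt in base-asset units

def stress_test(accounts, price, liquidate_cf, liquidator_discount, gas_cost, shocks):
    """Return (shock, n_liquidatable, n_profitable) for each fractional price shock.

    Assumes a liquidator earns `liquidator_discount` of the collateral value it seizes,
    and that a liquidation is only worth executing if that reward exceeds `gas_cost`.
    """
    results = []
    for shock in shocks:
        shocked_price = price * (1 - shock)
        liquidatable = [a for a in accounts
                        if a.debt > a.collateral * shocked_price * liquidate_cf]
        profitable = [a for a in liquidatable
                      if a.collateral * shocked_price * liquidator_discount > gas_cost]
        results.append((shock, len(liquidatable), len(profitable)))
    return results

accounts = [Account(10.0, 15_000.0), Account(0.5, 800.0), Account(3.0, 5_000.0)]
for row in stress_test(accounts, price=2_000.0, liquidate_cf=0.85,
                       liquidator_discount=0.05, gas_cost=60.0, shocks=[0.0, 0.1, 0.3]):
    print(row)
```

In this toy setup, the small account becomes liquidatable after a 10% drop but is not worth liquidating at this gas cost. That is the kind of residual bad-debt risk the gas-fee scenario is meant to surface.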

Economic Security Index

- Chainrisk’s Economic Security Index provides insight into a protocol’s ability to withstand and mitigate economic exploits, such as market manipulation, liquidity shortages, and other risks that could impact the value and stability of the protocol and its users. The index is a function of Loss Likelihood and Expected Loss.
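The proposal does not specify how the index combines its two inputs, so the sketch below computes only the inputs themselves from a list of per-run simulated losses. Loss likelihood is taken as the fraction of runs with a positive loss, and expected loss as the mean loss across all runs. Both definitions, and the helper name `loss_inputs`, are assumptions for illustration.

```python
from statistics import fmean

def loss_inputs(simulated_losses):
    """Return (loss_likelihood, expected_loss) from per-run losses (0 means no loss)."""
    if not simulated_losses:
        raise ValueError("need at least one simulation run")
    n = len(simulated_losses)
    loss_likelihood = sum(1 for x in simulated_losses if x > 0) / n
    expected_loss = fmean(simulated_losses)  # unconditional mean over all runs
    return loss_likelihood, expected_loss

# Ten hypothetical runs, two of which end with a loss
print(loss_inputs([0, 0, 0, 1200.0, 0, 300.0, 0, 0, 0, 0]))  # (0.2, 150.0)
```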

Previous work at Chainrisk

We have built an economic risk simulation engine for protocols deployed on any EVM-compatible chain, where protocol engineers can create granular simulations to test their mechanism design. We are working with a couple of protocols in the Ethereum ecosystem, namely Angle Protocol and Gyroscope.

Our sandboxed environment runs on top of the Rust EVM. We aim to enable Simulation-driven Development and run Agent-based Simulations for the Compound Ecosystem.

Research Work at Chainrisk -

Work with Angle Protocol

Context & Background -

Angle’s liquidation system is conceived as an improvement over more traditional liquidation mechanisms. It allows for variable liquidation amounts, meaning that the fraction of the debt that can be repaid during a liquidation is not fixed.

On top of that, the discounts given to liquidators are based on a Dutch auction mechanism, which minimises the discount liquidators receive while ensuring they can still profit. This protects borrowers being liquidated and lets them keep as much collateral as possible in their vaults.

Auctions and liquidations are primary functionalities responsible for the stability of Angle and agEUR, and hence must be thoroughly tested and verified.
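The sketch below shows why a Dutch auction keeps the liquidator discount low. The discount starts at zero and rises step by step, and a rational liquidator acts at the first step where the reward covers its costs. The liquidator is therefore paid roughly the minimum needed to act. This is a generic illustration of the idea described above, not Angle's actual discount formula. The linear schedule, the cap and the cost figures are hypothetical.

```python
def dutch_auction_discount(step, increment, max_discount):
    """Discount offered at a given auction step, rising linearly up to a cap."""
    return min(max_discount, step * increment)

def first_profitable_step(collateral_value, liquidator_cost, increment, max_discount,
                          max_steps=1000):
    """Return (step, discount) where the reward first covers the cost, or None."""
    for step in range(max_steps + 1):
        discount = dutch_auction_discount(step, increment, max_discount)
        if collateral_value * discount >= liquidator_cost:
            return step, discount
        if discount >= max_discount:
            return None  # even the capped discount does not cover the cost
    return None

# A liquidator with 160 units of cost acts once the discount reaches 2% of 10,000
print(first_profitable_step(10_000.0, 160.0, increment=0.005, max_discount=0.10))
```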

Angle <> Chainrisk Deck - Angle <> Chainrisk (Presentation)

Integration Specifics with Angle - https://twitter.com/chain_risk/status/1753358118573183032?t=u9MBIT2FIImHRPV3dDwLEA

Angle Collaboration Video - https://www.youtube.com/watch?v=zvHV5qRUZx0

Funding disbursement schedule

Sprint Goals for First 2-3 weeks / Milestone #1

Goal: To build a robust, dynamic, agent-based and scenario-based simulation engine for Compound, where protocol engineers can create simulations in a sandboxed environment to stress-test their mechanism design, with a built-in analytics dashboard and block explorer.

Objectives and Specific Commits:

Integrate the simulation engine with Compound:

- Developers shall be able to create a mainnet/testnet fork at a user-defined block height and make RPC calls.

- Write, store and edit JS/TS scripts for Agents, Scenarios, Observers, Assertions, and Contracts.

- Create and configure agent-based simulations to test specific features, strategies and mechanisms (liquidations, oracle manipulations, etc.).

- Run these simulations on-chain over a user-defined number of blocks.

- Analyse simulation results from the Observers in the built-in analytics and decide on the right set of risk parameters (Borrow Collateral Factor, Liquidation Collateral Factor, Liquidation Factor, Collateral Safety Grade, Supply Cap, Target Reserves, Store Front Price Factor, Liquidator Points, Interest Rate Curves).
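To illustrate how Agents, Scenarios, Observers and Assertions could fit together in one run, here is a minimal, self-contained Python sketch. A toy in-memory market state stands in for the forked chain and is advanced block by block. Every class, method and field name is hypothetical and does not reflect Chainrisk's actual scripting API, which the proposal describes as JS/TS.

```python
import random
from dataclasses import dataclass, field

@dataclass
class MarketState:
    """Toy stand-in for forked chain state (a real run would query a forked node)."""
    block: int
    price: float                                   # collateral price in base units
    positions: dict = field(default_factory=dict)  # account -> (collateral, debt)

class PriceShockScenario:
    """Scenario: cut the oracle price by `drop` at one block."""
    def __init__(self, at_block, drop):
        self.at_block, self.drop = at_block, drop

    def apply(self, state):
        if state.block == self.at_block:
            state.price *= 1 - self.drop

class RandomBorrower:
    """Agent: may open one position per run at a random loan-to-value ratio."""
    def __init__(self, name, rng):
        self.name, self.rng = name, rng

    def act(self, state):
        if self.name not in state.positions and self.rng.random() < 0.5:
            ltv = self.rng.uniform(0.5, 0.8)
            state.positions[self.name] = (1.0, state.price * ltv)

class LiquidatableObserver:
    """Observer: records how many positions are liquidatable at each block."""
    def __init__(self, liquidate_cf):
        self.liquidate_cf, self.series = liquidate_cf, []

    def observe(self, state):
        n = sum(1 for c, d in state.positions.values()
                if d > c * state.price * self.liquidate_cf)
        self.series.append((state.block, n))

def run_simulation(fork_block, n_blocks, agents, scenarios, observers, assertions,
                   start_price=2_000.0):
    """Advance block by block: scenarios, then agents, then observers, then assertions."""
    state = MarketState(block=fork_block, price=start_price)
    for block in range(fork_block, fork_block + n_blocks):
        state.block = block
        for scenario in scenarios:
            scenario.apply(state)
        for agent in agents:
            agent.act(state)
        for observer in observers:
            observer.observe(state)
        for check in assertions:
            if not check(state):
                raise AssertionError(f"assertion failed at block {block}")
    return state

rng = random.Random(42)
observer = LiquidatableObserver(liquidate_cf=0.85)
final = run_simulation(
    fork_block=19_000_000, n_blocks=10,
    agents=[RandomBorrower(f"user{i}", rng) for i in range(20)],
    scenarios=[PriceShockScenario(at_block=19_000_005, drop=0.3)],
    observers=[observer],
    assertions=[lambda s: s.price > 0],
)
print(observer.series)
```

Keeping the four roles as separate objects with one method each means a new scenario or observer can be added without touching the run loop. That loosely mirrors the "write, store and edit scripts" workflow above.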

-

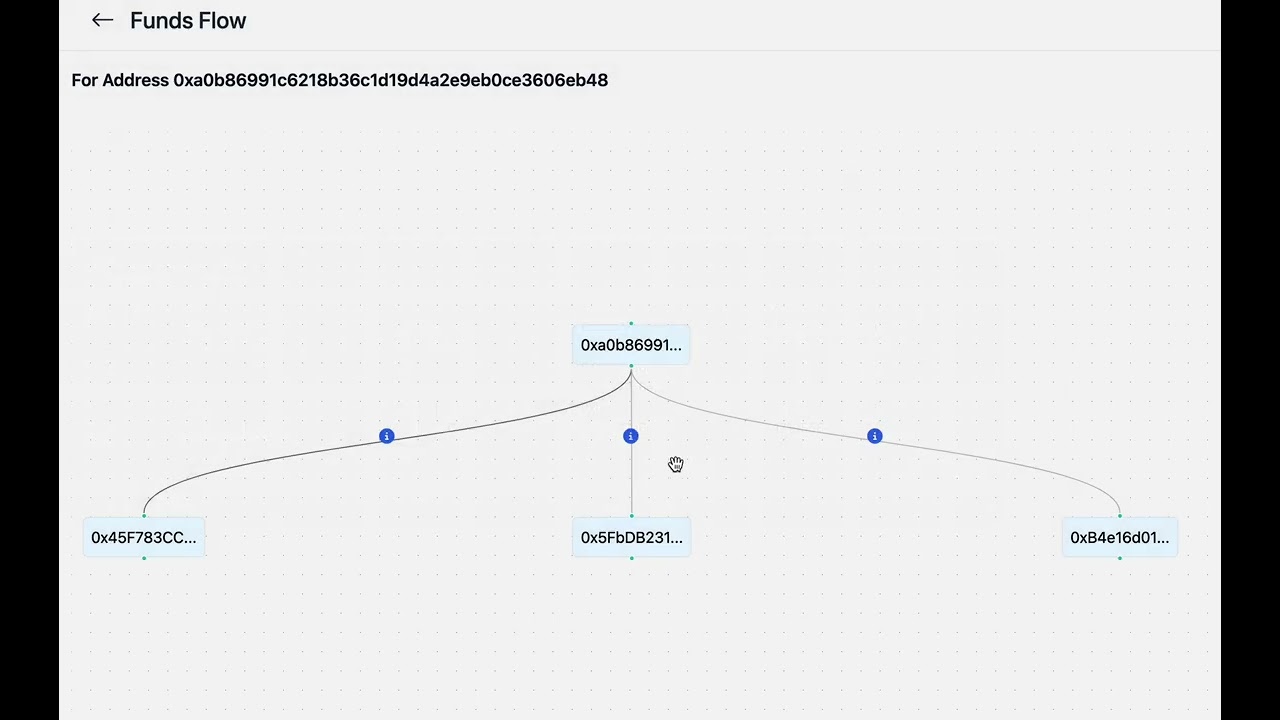

Block Explorer and Transaction tracing:

- Integrate an in-built block explorer with the simulation engine to show the blocks mined, transactions executed, contracts triggered and events logged during the simulations.

- Add a detailed transaction tracing feature that shows the flow of funds and breaks transactions down to the opcode level.

-

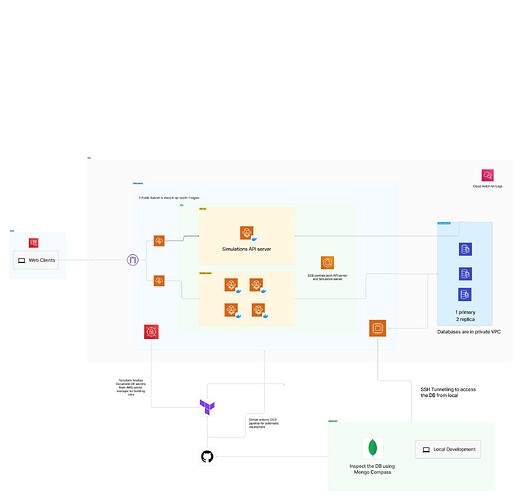

Dynamic backend (protocol agnostic):

The goal here is to build a single backend that can stress-test any DeFi protocol through JS/TS scripts of Agents, Scenarios, Observers, Assertions, and Contracts, while minimising protocol-specific dependencies.

- Develop modules and auxiliary tools that let Agents, Scenarios, Observers, Assertions, and Contracts communicate seamlessly without manual intervention.

- Make the block explorer dynamic, capable of decoding transactions within each simulation block for comprehensive analysis.

Release a beta-testing version on a single server:

- Release a beta-testing version where the Chainrisk team works on specific simulations closely with the Compound community and incorporates the feedback received.

- This single-node server will be able to run granular simulations.

Reward after 1st Milestone - $5,000

Sprint Goals for next 3-4 weeks / Milestone #2

Goal: Finalise all the features of the simulation engine, implement security measures, create granular simulations to test Borrowing, Liquidation and Interest Rate Modules of Compound, engage with the community, and prepare comprehensive documentation.

Objectives and Specific Commits:

Implement two-factor user authentication:

- We will use AWS Amplify and an AWS Cognito user pool to implement multi-factor authentication.

- Sign-in will require a username (email or wallet), a password, and a TOTP code from any authenticator app.
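Cognito performs TOTP verification itself, so the following is only a standard-library sketch of the TOTP algorithm. It shows what the authenticator-app factor actually checks: RFC 6238, built on RFC 4226 HOTP with HMAC-SHA1, 30-second steps and 6 digits. It is not the Amplify or Cognito API. The `verify` helper's drift window of one step is an assumed, commonly used default.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226: HMAC-SHA1 of the 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)

def totp(secret_b32: str, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238: HOTP with counter = floor(unix_time / step). Expects a padded Base32 secret."""
    key = base64.b32decode(secret_b32, casefold=True)
    t = time.time() if at is None else at
    return hotp(key, int(t // step), digits)

def verify(secret_b32: str, code: str, at: Optional[float] = None,
           window: int = 1, step: int = 30) -> bool:
    """Accept the current step or +/- `window` steps to tolerate clock drift."""
    t = time.time() if at is None else at
    return any(hmac.compare_digest(totp(secret_b32, t + k * step, step), code)
               for k in range(-window, window + 1))

# RFC 6238 test secret: ASCII "12345678901234567890", Base32-encoded
secret = base64.b32encode(b"12345678901234567890").decode()
print(totp(secret, at=59))              # "287082" (6-digit form of the RFC's 94287082)
print(verify(secret, "287082", at=89))  # True, accepted within one step of drift
```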

Implement CI/CD integrations and deploy the full app on the cloud:

- We shall use the AWS Rust SDK and Terraform to deploy the entire infrastructure on AWS ECS and Fargate, along with AWS Kinesis, AWS Glue, and Redshift for data analysis. This system will be able to run thousands of simulations in parallel for long durations.

- When a simulation is configured, the code for every Agent, Scenario, Observer, and Assertion will be packaged together and run in the same container. The container's life cycle will match the simulation's lifespan and will be managed by ECS.

- We will also use DynamoDB as the main database, S3 as the data lake and Redshift as the data warehouse for storing all data, which also keeps the web app fast. AWS Streams and Lambda will handle event-driven workloads and database triggers.

Create agent-based simulations to test different modules of Compound:

- Once cloud deployment is done, we will test the platform with thousands of simulations running in parallel to ensure its scalability and robustness.

- Assign a group of researchers/data scientists from the Chainrisk team to generate risk scenarios akin to Black Thursday.

- Prepare multiple scripts for Agents, Scenarios, Observers, Assertions, and smart contracts to test the protocol's mechanism design.

- Gather developer feedback on the platform, fix outstanding bugs, and implement features suggested by the Compound community.

Prepare comprehensive documentation:

- Along the way, we will prepare comprehensive risk management docs for the simulations we run for Compound.

- We will also deliver architectural diagrams for clarity.

Governance Risk Calls:

To deepen our commitment to community engagement and strengthen protocol security, Chainrisk will host monthly risk calls for the Compound community. These calls will focus on discussing:

- New risk tooling and analysis

- New Asset Listing Proposals

- Protocol launches and technical

- The broader market environment

- Anything else the community deems important and relevant for discussion

To facilitate ongoing risk assessment, each monthly call will run for a dedicated hour and will be supplemented by ad-hoc community calls when critical risk issues arise. Recordings and summaries of all calls will be made available for reference.

Reward after 2nd Milestone - $10,000

Sprint Goals for final 4-6 weeks / Milestone #3

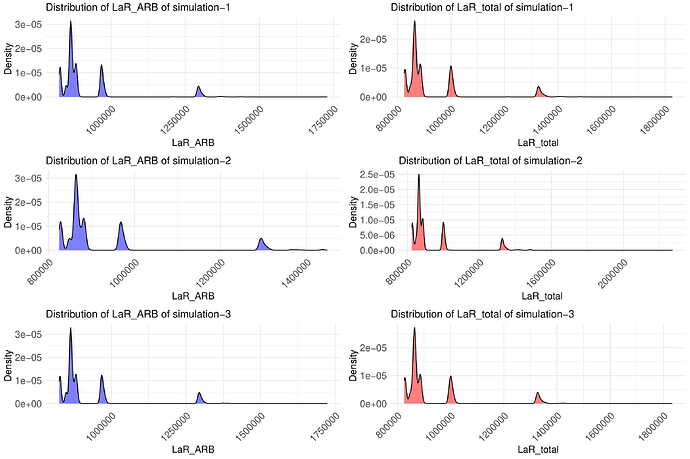

Goal: Redesign the backend and cloud infrastructure to run Monte Carlo simulations at scale, provide static parameter recommendations, and perform an end-to-end economic audit.

Objectives and Specific Commits:

Enable creation of stochastic price paths:

- Develop an algorithm to generate random price paths from a GARCH model, capturing properties such as volatility clustering and time-varying volatility.

- Validate the accuracy and statistical properties of the generated price paths against historical data.

- Develop and integrate the GARCH price oracle contract to the backend.
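As a sketch of the kind of price-path generator described above, here is a GARCH(1,1) simulation in plain Python. It assumes Gaussian innovations and hypothetical parameters (omega, alpha, beta) rather than values fitted to any Compound asset. The conditional variance follows σ²ₜ₊₁ = ω + α·rₜ² + β·σ²ₜ, so a large move raises the next step's variance. That feedback is what produces volatility clustering. Comparing statistics such as the sample volatility of the simulated log returns with those of historical returns is one simple starting point for the validation step.

```python
import math
import random
from statistics import pstdev

def garch_price_path(p0, n_steps, omega, alpha, beta, seed=None):
    """One price path with zero-mean GARCH(1,1) log returns and Gaussian shocks:
    r_t = sigma_t * z_t,  sigma_{t+1}^2 = omega + alpha * r_t^2 + beta * sigma_t^2."""
    if omega <= 0 or alpha < 0 or beta < 0 or alpha + beta >= 1:
        raise ValueError("need omega > 0, alpha, beta >= 0 and alpha + beta < 1")
    rng = random.Random(seed)
    var = omega / (1 - alpha - beta)  # start at the unconditional variance
    prices = [p0]
    for _ in range(n_steps):
        ret = math.sqrt(var) * rng.gauss(0.0, 1.0)
        prices.append(prices[-1] * math.exp(ret))
        var = omega + alpha * ret**2 + beta * var  # large shocks raise next-step variance
    return prices

def log_returns(prices):
    """Per-step log returns of a price path."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]

# Hypothetical daily parameters; in practice they would be fitted to historical data
path = garch_price_path(p0=2_000.0, n_steps=365, omega=2e-5, alpha=0.10, beta=0.85, seed=7)
print(path[-1], pstdev(log_returns(path)))
```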

Enable running thousands of simulations simultaneously:

- Dockerize the service and use Kubernetes to orchestrate containers.

- Distribute containers across multiple machines in the cloud.

Integrate a bespoke visualisation library:

- Create a backend endpoint for the visualisation library to query observer data for plotting.

- Create a Python FastAPI server to receive HTTP requests from the frontend for visualisations.

- Dockerize the service for easy deployment.

Enable longer simulations:

- Increase transaction throughput by optimising our customised Anvil infrastructure and revm.

- Minimise network calls to remote nodes to avoid HTTP timeouts.

End-to-End Economic Audit:

- We will test whether Compound's protocol parameters and financial modelling can withstand past economic exploits and market manipulations via thousands of Monte Carlo simulations.

- Analyse VaR, LaR (Liquidations at Risk) and Borrowing Power under market duress (e.g. Black Thursday).

- Finally, we will recommend parameters based on our statistical inferences, chosen to minimise or eliminate the attacker's incentive and profit.

- We will finally release a detailed economic audit report.
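One simple way to turn simulation output into a static recommendation is a grid search. Each candidate parameter value is evaluated with the Monte Carlo engine, and the most capital-efficient value whose simulated VaR stays within a risk budget is kept. The sketch assumes a hypothetical `simulate_var` callback standing in for the simulation engine. It also assumes that, as with collateral factors, a larger value means more borrowing power. The `toy_var` stand-in is purely illustrative.

```python
from typing import Callable, Iterable, Optional

def recommend_parameter(candidates: Iterable[float],
                        simulate_var: Callable[[float], float],
                        var_budget: float) -> Optional[float]:
    """Pick the largest (most capital-efficient) candidate whose simulated VaR is within budget."""
    feasible = [c for c in candidates if simulate_var(c) <= var_budget]
    return max(feasible, default=None)

def toy_var(collateral_factor: float) -> float:
    """Hypothetical stand-in for a Monte Carlo run: VaR grows once the factor exceeds 0.70."""
    return max(0.0, (collateral_factor - 0.70) * 1_000_000)

print(recommend_parameter([0.70, 0.75, 0.80, 0.85, 0.90], toy_var, var_budget=120_000))
```

Framing the search this way ties the recommendation to the stated KPI: it raises Borrowing Power only as far as the simulated VaR budget allows.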

Reward after 3rd Milestone - $10,000

Measures of Success/KPIs

We will measure things such as:

- Product deliverables ( As per milestones )

- Improvement in the values of VaR, LaR and Borrowing Power (without affecting protocol solvency) post our recommendations

- Community Net Promoter Score (NPS) for our engagement

- Communication and transparency to the community on work done and product access

Budget Breakdown:

Team Costs - $21,000 for 525 man-hours ($40/man-hour) over 3 months

For -

- One Data Scientist

- One Protocol Economist

- One Cloud Engineer

- One Core Backend Developer

- One Security Researcher

Tech Costs - $4,000
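For reference, the budget figures reconcile with the three milestone rewards:

```latex
\begin{aligned}
\text{Team} &= 525\ \text{man-hours} \times \$40/\text{man-hour} = \$21{,}000 \\
\text{Total} &= \$21{,}000 + \$4{,}000 = \$25{,}000 = \$5{,}000 + \$10{,}000 + \$10{,}000
\end{aligned}
```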

Where are we heading with this grant?

Open Source -

Yes, we shall open-source certain sections of the work. We will be creating simulations for the protocol during our engagement (described in the 2nd Milestone). The agents, scenarios, observers, assertions and contracts we create for those simulations can be open-sourced for the greater good of the community.

However, the backend of the platform shall remain closed-source due to security and proprietary reasons.

Follow on Grants for Maintenance and Updates -

After completing our first grant engagement, we would like to move ahead with a larger follow-on grant to cover the maintenance costs, cloud compute costs and HR (dev + research) costs of managing and improving the platform over time.

We believe many more enhancements can be made to secure Compound against impending economic exploits and market risks.

Project Roadmap in next 12 months -

In the next 12 months, we aim to implement the following features to deliver a full-stack risk management platform to the Compound ecosystem:

- LLMs for Data Queries and Visualisations on Simulations

- Bayesian Learning Models for Parameter Recommendations

- User Risk Monitoring Dashboard

- Economic Alerts

About the Team

Sudipan Sinha ( Co-Founder and Chief Executive Officer ):

- MTech in Mathematics and Computing at IIT BHU

- Top Security Researcher at HackerOne, Bugcrowd

- Ex-Chief White Hat at DetectBox

- Tech and Economics Mentor at EthGlobal 2023

- 2x Ethereum Foundation Grantee & 1x StarkNet Foundation Grantee

- Part of the Antler India Fellowship 2023 (Top 5 / 3k+ founders)

Arka Datta ( Co-Founder and Chief Product Officer ):

- Grad @ IIT Guwahati

- Fellow @ IISc Bangalore

- Part of the prestigious Polygon Fellowship 2022 (Top 50 / 10K+ builders)

- Before getting into Web3, Arka worked as a Software Engineer at Walmart on various security products

- Previously co-founded DetectBox, the world’s first decentralised smart contract audit marketplace, with Sudipan, backed by Antler India Fellowship, Ethereum Foundation and Starknet Foundation

- 2x Ethereum Foundation Grantee & 1x StarkNet Foundation Grantee

- Part of the Antler India Fellowship 2023 (Top 5 / 3k+ founders)

Abhimanyu Nag ( Head of Economics Research ):

- Previously worked at Nethermind, the Ethereum giant, as a Data Scientist, and at HyperspaceAI (then supercomputing, now decentralised LLM specialists) as a Protocol Economist and AI researcher.

- An International Centennial Scholarship holder at the University of Alberta, he co-authored Ethereum Improvement Proposal 5133, which delayed the difficulty bomb to mid-September 2022 ahead of Ethereum’s Merge on 15 September 2022, and as a result has his name cited in the Ethereum Yellow Paper.

- He also developed the data modelling and analytics for TwinStake, an institutional staking service developed in partnership between Nethermind and Brevan Howard Investment Fund.

- He has given talks about his work at maths research conferences and at the Ethereum Merge Watch Party by Nethermind.

- He played a key role in pushing HyperspaceAI to a $500 million valuation within 6 months of joining and helped the startup land a pitch at the Ethereum Community Conference in France in 2023.

- He was also part of the EigenLayer Research Fellowship hackathon and developed an innovative currency exchange system on EigenLayer.

- His main research has always been in Applied Mathematics and Statistics. He is co-writing three papers on Hidden Markov Modelling of tumour progression and health insurance fraud with professors at the University of Alberta, and he recently developed a new proof of the Saint-Venant theorem, to be published shortly.

- He was also a reviewer at the 4th International Conference on Mathematical Research for Blockchain Economy.

Siddharth Patel ( Core Blockchain Developer ):

- Having previously worked at Antier Solutions, Asia’s largest blockchain development firm, Siddharth has extensive experience with smart contracts and backend development for multiple complex DeFi protocols, including staking, yield farming, DEXs, and various token types, collectively managing assets exceeding $8 million.

- He has hands-on experience building various NFT standards and marketplaces, and has contributed to projects involving account abstraction and asset tokenization.

- He has been active in the blockchain industry for over 4 years and has won multiple hackathons, including a bounty at EthGlobal’s SuperHack '23 for developing CoinFort, a zk-based account-abstracted wallet (https://ethglobal.com/showcase/coinfort-d4vw7).

Rajdeep Sengupta ( Frontend & Cloud Engineer ):

- Previously worked at several startups: Immplify, DryF, and DetectBox.

- Worked as a core team member at Immplify on the app architecture, using multiple GCP services such as Firebase, Realtime Database, Cloud Functions, Cloud Run, IAM, API Gateway, KMS and more.

- During his time at DryFi, he worked with AWS, DynamoDB, Next.js, Lambda functions, API Gateway, Tailwind and more.

- He has been a contributor at Layer5 and has worked with AWS, GCP, and Azure.

- Won the national hackathon Ethos and has been a top-10 finalist in 6+ international hackathons.

Saurabh Sharma ( Head of Risk Engineering ):

- Grad School @ IIT Bombay

- Former Data Scientist at Safe Securities, where he worked on quantifying Web2 cyber risks by building and implementing various Bayesian and Monte Carlo models

- Conducted research on building quantum computers using phonons (quanta of sound) and built a prototype

- Worked in data science at Nexprt, analysing home decor data to target potential countries for trade

- Fellow @ IISc Bangalore

- Currently researching Quantum Error Correction Codes and Quantum Cryptography with IITB peers

Samrat Gupta ( Senior Security Researcher ):

- Proven experience in smart contract auditing, web application security and web pentesting.

- Participated in competitive audit platforms such as Code4rena, Sherlock and CodeHawks, finding 100+ high and medium vulnerabilities and securing $100M+ of user funds.

- Conducted 3 independent audits on the DetectBox platform for Kunji Finance, Etherverse and Payant escrow.

- Worked at QuillAudits, a leading web3 audit firm, as a smart contract auditor and security researcher, where he led QuillCTF.

X ( Twitter ) - https://twitter.com/chain_risk

Linkedin - Chainrisk | LinkedIn

Stay Tuned For Regular Updates